Predictions from the first AR OS

The software, hardware and UX considerations that will inform the architecture of the first AR OS

(This article was originally published in Hackernoon in June 2018)

A lot has been written about the AR glasses rumoured to arrive over the next few years, but not much about the operating systems and core UI that will underlie them. We can predict a few use-cases and some semi-dystopian visions of a world overlaid with data, but we have no real sense of what rules, tradeoffs and capabilities will define the moment-to-moment user experience.

This article looks ahead at the capabilities of a speculative HMD with an operating system built from the ground up for AR — what will it look like? What underlying concepts and considerations will shape the users’ experience? What rules and restrictions are imposed on developers?

As a startup CTO, I have to make concrete plans for a future that will be dictated by far larger players in the industry. This is difficult in a fast-changing landscape like AR, but by working backwards from observable limitations, it’s possible to take a guess at the environment our software may run in.

This article makes a few assumptions about the near-future:

HMDs will be released with an AR-OS built from the ground up, rather than a mobile OS. AR’s value can’t be fully realised solely as a feature on top of existing 2D UX (as we see from discovery, UX and monetisation challenges in mobile AR).

The fundamental concepts introduced in this AR-OS will be able to scale from the short-use early-adopter stage up to continuous all-day use.

Microsoft’s Holo UI is an example of a half-step towards a full AR-OS, but as will become clear later, its task-focused approach is not quite a true AR-first UX.

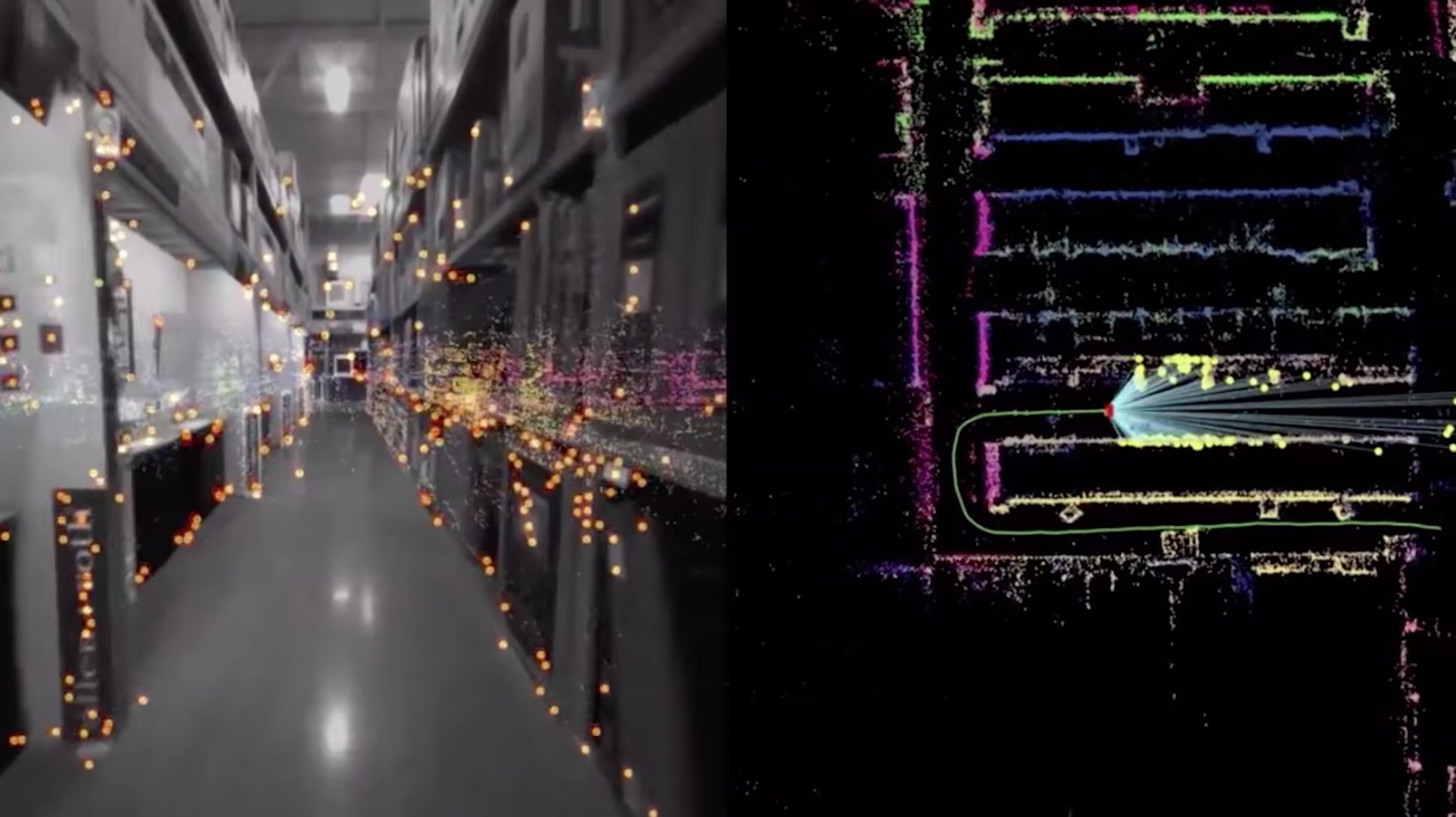

The glasses will allow for position-tracking, and sensors to recognise the user’s environment and 3D objects. Artificial Intelligence will provide contextual information and categorisation. Web services will be available for recognising flat images, 3D environments and objects from large databases.

At first AR glasses will be used for single specialized tasks like training or monitoring equipment on factory floors. Early session times will be short, due to comfort, bulk, and battery limitations, but will increase over time as hardware improves and software solves more problems.

The software that runs on an AR-OS can be divided into two broad types — Apps, and Tags.

Apps are similar to current desktop/mobile applications. They are launched by the user, can be positioned (albeit in 3D space), multitasked between, and will stay open until closed.

Example: A carpentry reference app that you open and place on a table next to the object you’re working on.

Tags are more like autonomous apps that are continuously looking for a particular environment, context or object which they can augment with information or by altering the world visually. Until that moment, they are invisible.

Example: A building manager enables a tag that highlights any equipment with a reported fault when they enter a room.
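As a rough sketch, the split between the two types might look like this in Python. Every name here (`SceneObject`, `App`, `Tag`, the `fault_reported` attribute) is hypothetical and only illustrates the idea: an app has an explicit open/closed lifecycle driven by the user, while a tag is just a trigger that stays silent until something in the scene matches it.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SceneObject:
    """A hypothetical object the OS has recognised in the environment."""
    label: str
    attributes: dict = field(default_factory=dict)


@dataclass
class App:
    """Launched and placed by the user; stays open until closed."""
    name: str
    is_open: bool = False

    def launch(self) -> None:
        self.is_open = True

    def close(self) -> None:
        self.is_open = False


@dataclass
class Tag:
    """Invisible until its trigger matches something in the environment."""
    name: str
    trigger: Callable[[SceneObject], bool]

    def matches(self, scene: list[SceneObject]) -> list[SceneObject]:
        # Return only the objects this tag wants to augment.
        return [obj for obj in scene if self.trigger(obj)]


# The building-manager example: highlight equipment with a reported fault.
fault_tag = Tag(
    name="fault-highlighter",
    trigger=lambda obj: obj.attributes.get("fault_reported", False),
)

room = [
    SceneObject("boiler", {"fault_reported": True}),
    SceneObject("thermostat"),
]
highlighted = [obj.label for obj in fault_tag.matches(room)]
```

In this sketch the tag never asks to be launched; the OS would run `matches` whenever the scene changes and decide what, if anything, to show.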

Today, the basic concepts in a mobile OS are a continuation of the metaphors used since ancient times on PCs and feature-phones (by which I mean the 90s). Applications focused on a single task are launched from a desktop or home-screen, and then accept input until closed or suspended.

This task-focused model won’t work for an AR HMD. When you pick up a phone you have already decided which task you want to carry out, and the home-screen UX is optimised around that; a grid of apps ready to launch and take up the screen. A phone OS only needs to care about its own display, and getting you to the right task ASAP.

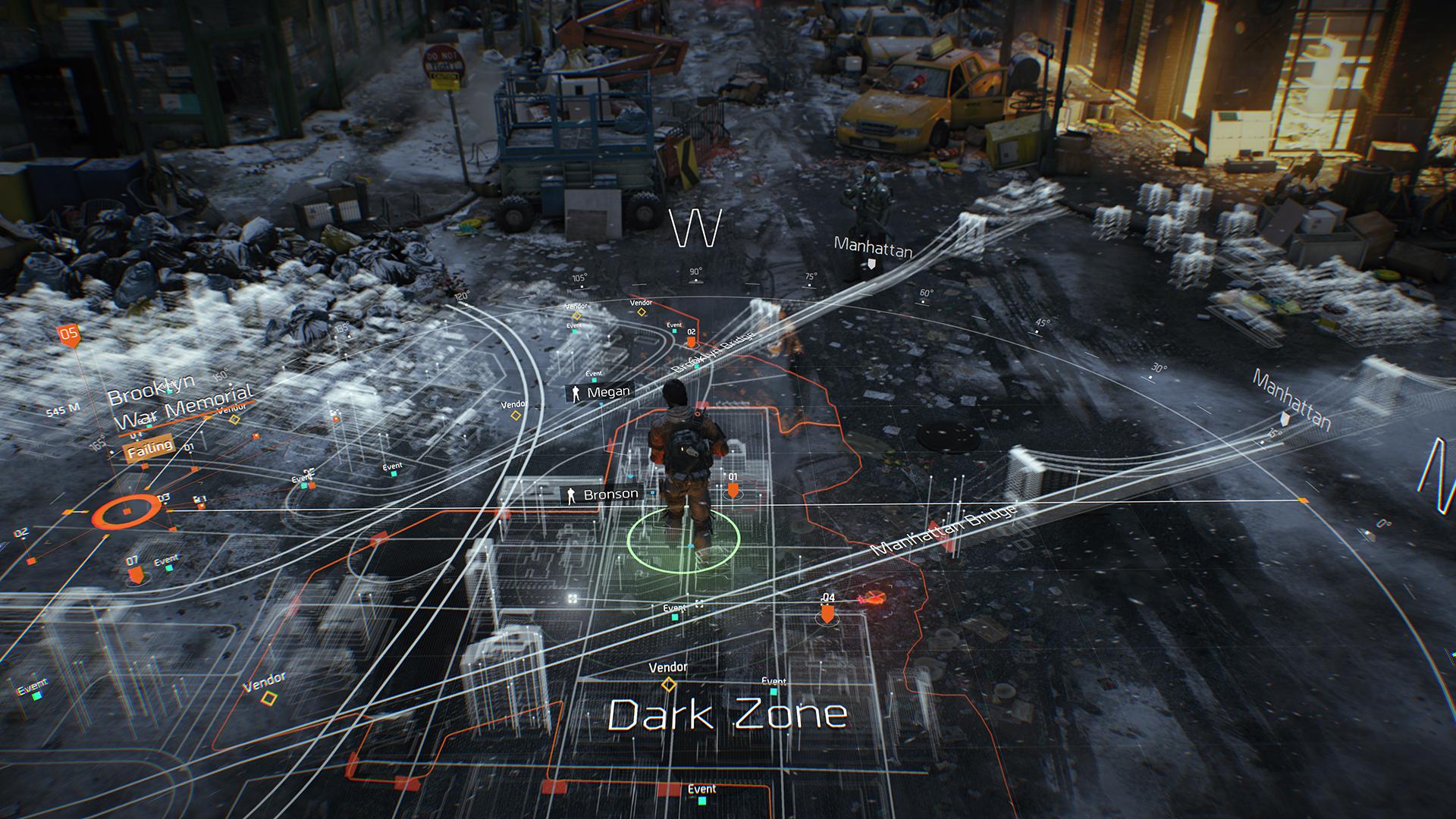

However, an AR UI is continuously visible, sharing space with real-world physical tasks the user may be performing. The display being managed is the world itself: having a grid of icons floating in your face while chopping vegetables is a poor experience, and only being able to display one task at a time in the world is overly limiting.

The ‘home screen’ of an AR-OS is context-based — actively interacting with and augmenting the world, not waiting for the user to select a single task. Most experiences on the device run not as standalone tasks that take over the entire display, but as apps or tags shown in-context within the world: A video plays on a wall, while on a counter a recipe shows the next step of preparation and a thermostat hovers by the door.

The way we organise our physical world is probably more relevant to AR than how we organise our 2D interfaces. In the real world we surround ourselves with extremely low-friction ways of consuming information and performing tasks (right now I can grab a TV remote, a notebook, a science book, a takeaway menu, my phone or a coffee without looking up). We perform multiple tasks at once, while changing locations and contexts, and rely on unfamiliar environments to signal what we can do in particular areas. Our relationship to our environment affects our way of thinking itself.

Task-focused full-environment apps will exist, but they are not the default building-block of an AR-OS; instead they will be a special state entered by the user. The AR-OS’s default mode is like a well-lit living room — all possible tasks visible but not demanding attention. A task-focused app is like turning the lights off to watch a movie — a temporary situation to minimise distraction.

Part of the power of AR is the ability to discover tasks and information naturally — by encountering them in the spaces we live in. Right now this capability is locked behind the limitations of mobile OS.

Mobile AR’s biggest challenge is the friction of needing to download and open a single-task app in the right context. Users may be willing to do it once for a brief branded experience, but as part of day-to-day life it simply isn’t scalable.

Discovery is also a huge issue for task-oriented AR apps in both mobile and an AR-OS. Users need to be prompted in the real world (‘Hey — download our app and scan this!’), or remember seeing some marketing to even be aware an AR experience exists at a location or on an object.

Tags place themselves in the environment automatically, without requiring the user to recognise they would be useful in a particular spot and launch them. Tags could include things like name labels on plants, calorie counts on meals, or historical points of interest.

More esoteric tag examples could include:

On top of user-installed tags, and tags enabled in the AR-OS by default, location-based tags that automatically show content from a location’s owner will be available. Imagine augmented exhibits in museums, or way-finding in a hospital that directs you to your specific appointment.

Context-based augmentation — entering a space and having additional information, interactions and aesthetics layered on top — is mostly uncharted territory in terms of architecture and UX, as it is only really possible via AR (though you could argue precursors include context-based tech like beacons on mobile devices).

Some of the possibilities (including the role of the web as a tag in AR, and ownership of spaces) are covered in the next article in this series.

The limitations of phone screens forced a step back from windowed multi-tasking UIs, to single-task. Multitasking on mobile is possible but still awkward to this day — a concession made due to the limitations of the hardware.

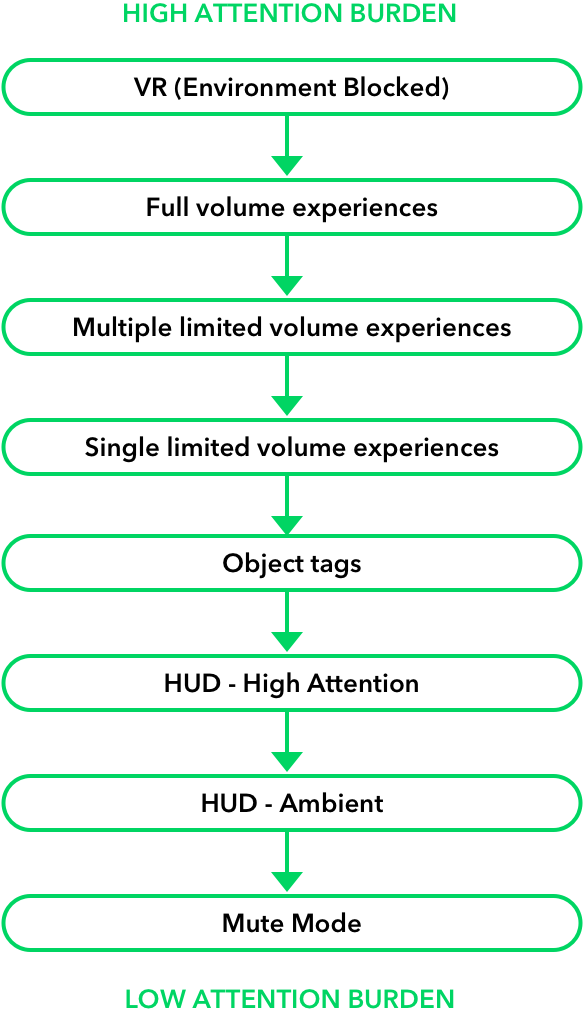

Despite having the ability to present information anywhere, in any form, an AR-OS will still be tightly constrained. This time, however, the concessions are mainly to the user rather than the hardware: a user has perceptual and mental limitations, such as attention and spatial comprehension, that can easily be overwhelmed, causing frustration and discomfort.

An AR-OS will need to manage the resources of the user as much as the resources of the device itself, which will require some very careful, empathetic UX design. Attention will need to be treated as a limited resource to be managed, and balanced against the needs of the real world. Physical space will also need to be managed carefully, to ensure the AR-OS is always easily comprehensible.

With current devices, an OS can assume that if the user is looking at the display, they are devoting most of their attention to the task being presented. If the user wishes to shift their attention, it’s as simple as looking away.

In AR, the ‘display’ consists of everything the user can see, so the same assumptions cannot be made. If you fill a user’s space with whirling information while they try to do another non-AR task, they will become distracted and frustrated.

Users have a finite amount of attention they can pay to the world, so an AR-OS must manage attention as a resource. Its goal is to present only the information the user needs in the world, with as little mental burden as possible.

So, how do we manage the user’s attention in a way that minimises distraction? Some rules can be easily intuited:

Intensity

Complexity

Again, since we are dealing with a user’s visual cortex rather than their display, there is more complexity than traditional UI design. As just one example, designers will need to avoid startling their users:

These concepts are intuitive to reason about, but they can demand different ways of thinking from 2D UI design, encompassing ideas from architecture, way-finding, product-design, psychology and neurology.

Attention and Tags

Attention is simple to manage when the user is focused on a single activity. Because the user has launched a task, we can assume they intend to give it attention, which the creator of the task-oriented software can then design for. For example, a 3D editing tool would expect to take up most of the user’s focus and visual field, whereas an IKEA build guide can reasonably expect the furniture it describes to be present, and needs to keep it visible.

However, a lot of the usefulness of an AR-OS comes from its ability to offer a range of information and actions based on the world around the user. In order to avoid distraction and annoyance, this ambient UI’s goal should be to reach the lowest-attention state possible while still delivering the desired information.

It then follows that the ideal ‘low-energy’ state of an AR-OS is to look as close to plain reality as possible. It’s worth noting that this is directly opposed to our movie-influenced vision of how future AR UIs look: filling the space in front of a person, or with densely-designed panels pinned all around a user.

This also suggests information presented by tags should be temporary but retrievable later. For example, upon recognising a dish, a calorie-count tag may only have access to a limited space around the dish, for a set period of time, to show the count. The tag may also be limited to food the user is directly interacting with. As a result of these restrictions, this tag would not be able to permanently put counts above every object in a supermarket at once. This prevents both spammy and poorly-designed tags.
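One way to picture these restrictions is as a short-lived grant handed out by the OS. The sketch below is an assumption on my part rather than a known design: `DisplayGrant`, `request_display` and the five-second default are all made up for illustration. It only shows the two rules from the calorie-count example, namely that content expires and that it is only granted for objects the user is interacting with.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DisplayGrant:
    """Hypothetical permission for a tag to show content near one object."""
    tag_name: str
    object_id: str
    granted_at: float  # seconds, on whatever clock the OS uses
    duration: float    # seconds the content may stay visible

    def is_active(self, now: float) -> bool:
        # The grant simply lapses once its time window has passed.
        return self.granted_at <= now < self.granted_at + self.duration


def request_display(
    tag_name: str,
    object_id: str,
    interacting_with: set,
    now: float,
    duration: float = 5.0,
) -> Optional[DisplayGrant]:
    """Grant a short-lived display slot only for objects the user is handling."""
    if object_id not in interacting_with:
        return None  # e.g. every other item on the supermarket shelf
    return DisplayGrant(tag_name, object_id, now, duration)


# The user is holding a plate; the shelf item is merely in view.
in_hand = {"plate"}
plate_grant = request_display("calorie-count", "plate", in_hand, now=0.0)
shelf_grant = request_display("calorie-count", "shelf-item", in_hand, now=0.0)
```

Here `plate_grant` is valid for five seconds and `shelf_grant` is `None`, so the tag cannot blanket a whole aisle however it is written.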

A notification system will be needed to manage these events and subtly prompt the user that something is available within a particular space even after the initial notification has passed. This notification system will be expanded on later in the series.

The OS will be aware of the available space around the user, which will vary greatly depending on their location (e.g. a phone booth vs a park).

Placing virtual objects ‘inside’ real objects or walls may be visually uncomfortable, especially if the real object is heavily patterned, as the disparity in depths creates a ‘cross-eyed’ sensation. Avoiding this means that the space that tasks and tags can occupy is limited, and varies between environments.

The OS will be expected to choose the best place to locate information and activities to minimise discomfort and distraction. Since so much of AR is contextual, many use-cases rely on information being presented next to or on the object being referred to, so activities will need to be able to request specific volumes of space.

As mentioned, overlapping experiences need to be avoided, so the OS will need ways of managing requests for access to the same volume, or presenting the user with a notification that information/an activity is available.

Details like activity type, total volume requested, anchor-point requested, task priority and current context will all need to be considered by the OS when assigning space.
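To make the arbitration concrete, here is a minimal sketch of how requests for overlapping volumes could be resolved. It assumes axis-aligned boxes and a single numeric priority, which is far simpler than the list of factors above; `Volume`, `SpaceRequest` and `assign_space` are hypothetical names, not part of any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Volume:
    """Axis-aligned box in metres, described by its min and max corners."""
    min_corner: tuple
    max_corner: tuple

    def overlaps(self, other: "Volume") -> bool:
        # Two boxes overlap only if their extents overlap on all three axes.
        return all(
            self.min_corner[i] < other.max_corner[i]
            and other.min_corner[i] < self.max_corner[i]
            for i in range(3)
        )


@dataclass(frozen=True)
class SpaceRequest:
    requester: str
    volume: Volume
    priority: int  # higher wins; a stand-in for type, context, etc.


def assign_space(requests):
    """Grant requests in priority order; overlapping losers are deferred.

    A deferred request would surface as a notification rather than content.
    """
    granted, deferred = [], []
    for req in sorted(requests, key=lambda r: r.priority, reverse=True):
        if any(req.volume.overlaps(g.volume) for g in granted):
            deferred.append(req)
        else:
            granted.append(req)
    return granted, deferred


requests = [
    SpaceRequest("recipe-app", Volume((0, 0, 0), (1, 1, 1)), priority=5),
    SpaceRequest("calorie-tag", Volume((0.5, 0.5, 0.5), (1.5, 1.5, 1.5)), priority=1),
    SpaceRequest("thermostat-tag", Volume((3, 0, 0), (3.5, 0.5, 0.5)), priority=1),
]
granted, deferred = assign_space(requests)
```

In this example the recipe app and the thermostat tag get their volumes, while the calorie tag collides with the recipe and is deferred. A real allocator would presumably also try to relocate the loser before falling back to a notification.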

This UI needs to allow many experiences in one location without taking up the user’s attention. Some potential solutions will be discussed in the next article.

The user understandably wants discretion over their privacy and how much data third-parties have access to. Trust will be a huge factor in how an AR-OS is structured, given the presence of cameras, microphones and other sensors in a device that may be worn continually throughout the day.

This issue of trust extends to others around the user as well. Although most of us already carry microphones & cameras on us (and use them continually), the backlash to Google Glass shows that HMDs inhabit a different mental category to smartphones (partly due to their intrusive & outlandish appearance). An AR-OS has an uphill battle with already-negative public perception, and needs to be far more restrictive with sensor access than smartphones in order to win any trust at all.

It’s also worth noting that trust is being placed in the system due to its ability to alter visual perception. Malicious (or simply ill-designed) AR software can obscure dangers in the environment, or cause dangerous sudden visual distractions.

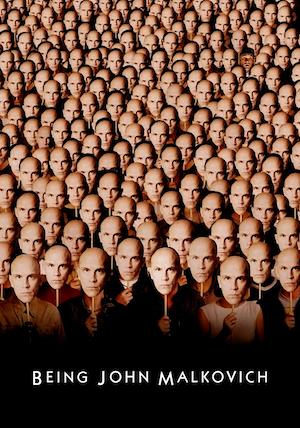

For full-time use, the user needs to have a level of trust in the OS close to airline autopilot or banking software. As previously mentioned, most of us have been carrying cameras and microphones in our pockets for a decade, and staring at them most of the day without much security beyond the occasional sticker on a laptop webcam. The head-mounted nature of AR glasses is much more viscerally personal, and any intrusion would be immensely disturbing. Someone with access to those sensors is hijacking our entire life experience, hitching a ride in our head.

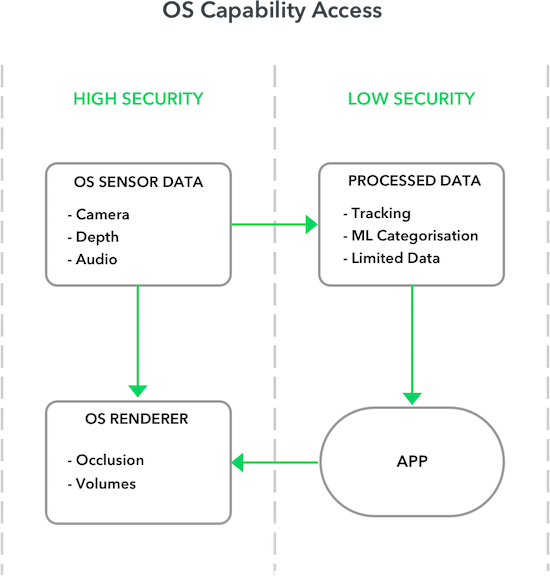

The default for any third-party code on the device is that it does not have access to raw sensor data, including video from the cameras, depth/environment data, and microphone. This is essential to preserve the privacy of the user.

An environment mesh built from the user’s surroundings can be accessed by apps at a limited rate, and within a particular volume assigned by the OS’s spatial management. This prevents malicious code from building high-resolution maps of a user’s environment, while still allowing placement of objects on surfaces or other basic environment interaction.
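A gatekeeper for mesh access could be sketched as below. This is illustrative only: `MeshGate`, the one-second minimum interval, and the use of bare vertex tuples in place of a real mesh are all assumptions. The point is that both limits, rate and volume, are enforced by the OS before any geometry reaches third-party code.

```python
class MeshGate:
    """Hypothetical OS-side wrapper around the environment mesh."""

    def __init__(self, vertices, min_interval_s=1.0):
        self._vertices = vertices          # list of (x, y, z) tuples
        self._min_interval_s = min_interval_s
        self._last_query = {}              # app name -> time of last granted query

    def query(self, app, box_min, box_max, now):
        """Return only the vertices inside the app's assigned volume."""
        last = self._last_query.get(app)
        if last is not None and now - last < self._min_interval_s:
            raise PermissionError(f"{app} is querying the mesh too often")
        self._last_query[app] = now
        return [
            v for v in self._vertices
            if all(box_min[i] <= v[i] <= box_max[i] for i in range(3))
        ]


gate = MeshGate([(0.0, 0.0, 0.0), (0.5, 0.5, 0.5), (5.0, 5.0, 5.0)])

# The app has been assigned a one-metre cube, so it never sees the far vertex.
visible = gate.query("placer", (0, 0, 0), (1, 1, 1), now=0.0)
```

A second call from the same app within the interval raises `PermissionError`, which is what stops a malicious app from sweeping its volume around to stitch together a full map.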

The most common use of the environment mesh is for occlusion — blocking 3D rendered objects when they are behind something in the real world (like under a table). This is handled securely at the OS level by the renderer/compositor, allowing for high resolution visual interaction with the full environment, without exposing the data itself.

By default, tags have access to a narrow range of notification/display methods standardised by the OS. A current example would be how push notifications work on mobile: they have a fixed UI design and their behaviour is limited to what’s allowed by the OS.

Tags can request additional notification privileges in ambient-use mode to allow for more dynamic behaviour. This allows tags to place content in volumes immediately adjacent to objects of interest, or location tags to display automatically.

A travel tag with default privileges would pop up an OS-provided text box saying ‘Flight Boarding at Gate 11’, whereas a tag with more privileges could show a glowing pathway to the gate.

Apps in task-oriented mode can also request extra privacy permissions, allowing access to raw video, depth and microphone data instead of only the default position-tracking data.

Apps can also request access to full environment meshes and social permissions, which would allow features like pinning an object in your home and having a family member see it in the same place.

Above all, the user must be aware that an app has direct access to sensor data for the entire duration of its access. A visual indicator will probably be required at the OS level: think the now-universal ‘red dot = recording’.
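The permission tiers described in this section could be modelled as a set of flags. The sketch below uses Python's standard `enum.Flag`; the permission names and the two helper functions are hypothetical and chosen only to mirror the travel-tag and recording-indicator examples.

```python
from enum import Flag, auto


class Permission(Flag):
    NONE = 0
    STANDARD_NOTIFICATION = auto()  # OS-drawn text box, the default for tags
    ADJACENT_VOLUME = auto()        # may place content next to objects
    RAW_CAMERA = auto()
    RAW_DEPTH = auto()
    MICROPHONE = auto()
    FULL_MESH = auto()


RAW_SENSORS = Permission.RAW_CAMERA | Permission.RAW_DEPTH | Permission.MICROPHONE
DEFAULT_TAG = Permission.STANDARD_NOTIFICATION


def notify_boarding(granted: Permission) -> str:
    """Choose how the travel tag may present its message."""
    if Permission.ADJACENT_VOLUME in granted:
        return "render glowing pathway to Gate 11"
    return "show OS text box: 'Flight Boarding at Gate 11'"


def recording_indicator_required(granted: Permission) -> bool:
    """The OS shows the 'red dot' whenever any raw sensor is exposed."""
    return bool(granted & RAW_SENSORS)


basic = notify_boarding(DEFAULT_TAG)
rich = notify_boarding(DEFAULT_TAG | Permission.ADJACENT_VOLUME)
needs_dot = recording_indicator_required(Permission.FULL_MESH | Permission.MICROPHONE)
```

Keeping the indicator check in the OS, rather than trusting each app to draw its own, is what would make the red dot meaningful.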

A Note on Machine Learning & Security

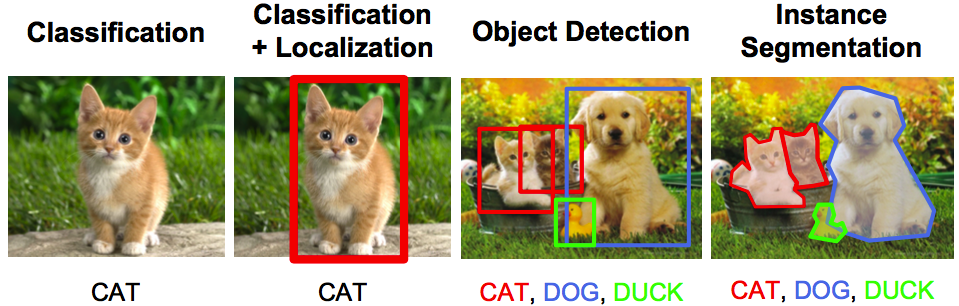

Machine learning is a powerful tool for the AR-OS, but also presents its own challenge due to needing full access to sensor data and having a huge range of possible uses. Common ones include recognising and labelling objects, performing visual searches, and anticipating the user’s needs/actions.

A range of simple, composable default models are included with the OS, available to apps, and are carefully designed to not reveal private information.

Custom ML models can also be created by third-parties, but require explicit approval by the OS-maker. The complexity of testing these models, and high risk of leaking private data suggests this might not be an option open to the general public, but rather specific strategic partners.

Context describes creating an OS-level awareness of what the user is doing at that moment, and transforming the display of information appropriately.

It’s a bad experience to have calorie counts, song names and incoming email alerts bouncing in your field of view when trying to have a heartfelt conversation with someone.

Mobile devices took a swing at managing real-world contexts with ‘profiles’ on phones: presets that alter notifications, ringer volume and vibration settings. However, since these need to be set manually, the onus is on the user to constantly change settings according to their location and actions, which is an unreasonable amount of friction.

In all cases, priority needs to be given to people in the environment, with the assumption that the user’s focus is on whoever they are talking to. Notifications need to be dialled down and OS attention and volume management put at maximum during conversations.

Luckily machine-learning may be a ‘good-enough’ solution here. A series of AI models including facial recognition, motion detection, location labelling and object categorisation could act as inputs into a context-focused model (or hard-coded decision-tree) to determine whether the user is engaged in a conversation.

For example: the facial recognition model reports that a face is present in front of the user, the motion model reports that the user is most likely sitting, the audio model reports a real (non-video) conversation is happening, the location model reports that the environment resembles an office, and the object categoriser recognises pens, papers and a conference table. From these cues it’s reasonable to assume the user is in a meeting, or at least engaged in a discussion at a workplace, and does not want to be distracted unless it’s of some importance.
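The hard-coded decision-tree variant of this is simple enough to sketch. The signal names, context labels and importance threshold below are all invented for illustration; in practice each signal would come from one of the models described above and carry a confidence rather than a plain value.

```python
def infer_context(signals: dict) -> str:
    """Map hypothetical model outputs to a coarse context label."""
    in_conversation = bool(
        signals.get("face_present", False) and signals.get("live_speech", False)
    )
    if (
        in_conversation
        and signals.get("location") == "office"
        and signals.get("posture") == "sitting"
    ):
        return "meeting"
    if in_conversation:
        return "conversation"
    if signals.get("posture") == "walking":
        return "in_transit"
    return "ambient"


def allowed_notifications(context: str, notifications: list, threshold: int = 8) -> list:
    """During conversations, let through only notifications of some importance."""
    if context in ("meeting", "conversation"):
        return [n for n in notifications if n["importance"] >= threshold]
    return notifications


# The cues from the example above.
signals = {
    "face_present": True,
    "live_speech": True,
    "posture": "sitting",
    "location": "office",
    "objects": ["pen", "paper", "conference table"],
}
context = infer_context(signals)

pending = [
    {"text": "Song: unknown track", "importance": 1},
    {"text": "Your flight is boarding", "importance": 9},
]
shown = allowed_notifications(context, pending)
```

With these inputs the context comes out as a meeting and only the boarding alert is shown. The `objects` signal is unused in this sketch; a learned model could weigh it alongside the others instead of relying on fixed rules.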

The approach taken here could vary from hard-coded rules to a fuzzier approach trying to infer the user’s available attention.

Some examples of contexts in which an AR-OS adapts its behaviour:

The above contexts all have distinct expectations from the user:

Concentration vs Exploration: How much the user is focused on a task, and how receptive they would be to additional information and possibilities.

Privacy: The reasonable expectation of privacy in the situation. Whether the device should set its own mute mode, or prompt the user to set it.

Navigation: Whether the OS should be sensitive to the user’s need to see and navigate where they are going while they are in transit.

Mobility: Whether the OS should be prioritising tags on objects and volumes within arm’s reach or further out in the world, depending on whether the user is seated or free to move around.

Social: Whether the user is engaging with other people, and whether those interactions shouldn’t be interrupted.

Contexts can also be used to trigger certain tags, for example calendar & notes tags may appear during phone calls, or a favourite route-planner when entering train stations.

Mute Mode

For moments where the contextual system fails, where the user anticipates their own needs, or where they want to guarantee no distractions, they can enable a ‘mute mode’. This fully disables cameras, positional sensors, and the display until the user resumes them.

At the beginning this is less of an issue, as users are putting on AR glasses for specific tasks they are comfortable with, but once users move to full-time wear it will become important for context to be handled sensitively and for sensor state to be clearly communicated to both the user and others. In the early days this may be as simple as taking the glasses on and off.

I have made a few underlying assumptions that (if wrong) could easily change the nature of an AR-OS:

This assumption is one most would take for granted, but the one I’m least certain about — after all the iPhone itself launched without the app store. The complexity, UX considerations, and privacy implications of third-party code make app stores less attractive to glasses-makers; at least at the beginning. The first glasses to have a security flaw allowing remote camera access will be a global news event.

However, the billions of dollars of revenue provided by app stores, and the sheer cultural momentum of what constitutes a hardware platform, do make an app store likely, even if those apps are severely limited by permissions.

I’ve glossed over what I see as the two biggest barriers to AR glasses — social stigma and battery life.

I don’t think the aesthetic drawbacks will be insurmountable (I remember what people looked like when Bluetooth headsets first arrived), but I think the road will be long and paved with plastic reminders that fashion is a difficult discipline in itself.

Battery life speaks for itself — all-day use simply isn’t possible yet. There is promise in lower-energy displays that project directly onto the retina, but continual processing of a 3D environment will remain an expensive process.
